[AINews] Bespoke-Stratos + Sky-T1: The Vicuna+Alpaca moment for reasoning

Chapters

AI Twitter Recap

AI Reddit Recap

Deepseek R1 Limitations and Model-Agnostic Reasoning Techniques

AI Safety, Ethics, and Regulation

AgentWorkflow and Multi-Agent Solutions

Cohere Discord

DeepSeek R1 Excels in Math Problems

Interconnects (Nathan Lambert) ▷ #

Interconnects: Discussions on AI, Job Displacement, and OpenAI vs Competitors

Discussions on Various AI-related Topics

Discussions on Implementing GPU Papers Collaboratively

NVIDIA Blackwell Codegen and GPU Emulation Insights

Use Cases and Developments in AI Community Discords

Cohere Discussions and Questions

Footer and Social Networks

AI Twitter Recap

Claude 3.5 Sonnet recaps the latest developments in AI on Twitter:

-

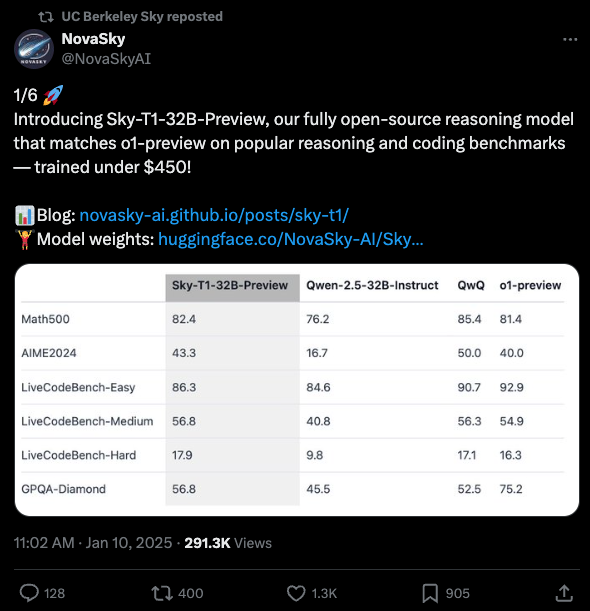

DeepSeek-R1 Innovations and Performance: Discussions on DeepSeek-R1's training via reinforcement learning and its multimodal functionalities, with Bespoke-Stratos-32B introduced as a distilled version.

-

Gemini and Other LLM Advancements: Highlighting Google's Gemini 2.0 Flash Thinking model and its performance across various domains.

-

AI Model Comparisons and Critiques: Insights into models like o1 and R1-Zero, discussing repeatability and limitations.

-

AI Applications and Tools: Showcasing Windsurf for analyzing code and creating slide decks, and introducing llama.cpp server for LLM completions on low-end hardware.

-

AI Integration with Development Tools: Integrations like LlamaIndex with DeepSeek-R1 for AI-assisted development and multi-agent systems.

-

AI Research and Papers: Introduction of IntellAgent multi-agent framework and discussions on behavioral self-awareness in LLMs.

AI Reddit Recap

Theme 1. Mistral 10V: Exploring New Capabilities with 12K Tokens

- The post highlights the intense competition between the USA and China in the field of AI, specifically mentioning China's advancements in robotics with Mistral 10V.

- Discussions involved skepticism about authenticity, China's robotics advancement, potential military applications, and job replacements by robots.

- OpenAI launched Mistral 10V with 12,000 tokens, leading to discussions on the speaker's vocal fry, economic implications of AI, and political undertones.

- Satirical takes on AI limitations and user interactions were shared, contributing to a humorous perspective on AI advancements.

Theme 2. O1-Pro: Revolutionary Use in Legislation Analysis

- An analysis of Trump's Executive Orders using O1-Pro sparked discussions on short and long-term impacts, fact-checking, and economic concerns.

- 3blue1brown's video on the attention mechanism received praise for its clear explanation and importance of masking during training.

Theme 3. Gemini 1.5: Leading AI with Performance Edge

- Elon Musk's doubts about SoftBank's funding raised skepticism, leading to discussions on legality, economic implications, and Elon Musk's credibility.

- OpenAI's Stargate Project stirred discussions about SoftBank's involvement, project goals, and skepticism surrounding the scale and impact of the project.

Deepseek R1 Limitations and Model-Agnostic Reasoning Techniques

This section discusses the brittleness of DeepSeek-R1, highlighting its limitations and strengths. It performs well in specific scenarios when prompts are optimized with a specific structure and parameters. However, it struggles with creative or subjective tasks and needs precise prompting to improve. Comparisons with other models such as DeepSeek V3 show strengths and weaknesses in different task types. Additionally, discussions around model-agnostic reasoning techniques describe extracting the reasoning from deepseek-reasoner and using it to enhance other models' performance. The discussions cover various workflows, skepticism, and technical implementations of these reasoning techniques.
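The reasoning-extraction idea can be sketched as follows. This is a minimal illustration, not the workflow any participant actually used: it assumes the reasoning model's raw output wraps its chain of thought in `<think>...</think>` tags, and the helper names `split_reasoning` and `build_handoff_prompt` are hypothetical. The resulting prompt would then be sent to whichever second model is being enhanced.

```python
import re

# Assumption: the reasoning model emits its chain of thought inside <think> tags.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw reasoning-model completion."""
    match = THINK_RE.search(raw_output)
    if match is None:
        # No reasoning block found: treat the whole output as the answer.
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = (raw_output[:match.start()] + raw_output[match.end():]).strip()
    return reasoning, answer


def build_handoff_prompt(question: str, reasoning: str) -> str:
    """Wrap the extracted reasoning into a prompt for a different model."""
    return (
        "A separate reasoning model produced the notes below.\n"
        f"<reasoning>\n{reasoning}\n</reasoning>\n\n"
        f"Using those notes, answer the question:\n{question}"
    )
```

Keeping the extraction separate from the prompt construction means the second step stays independent of which model produced the reasoning, which is the sense in which the technique is model-agnostic.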

AI Safety, Ethics, and Regulation

Discussions in this section touch on various aspects of AI safety, ethics, and regulation. Concerns were raised over AI job displacement as a founder expressed moral dilemmas about potential layoffs caused by their AI startup's success. The need for robust safety metrics in AI models like MiniCPM was highlighted, emphasizing ethical AI development. Regulatory challenges in AI were explored, with discussions on winners and losers in the evolving regulatory landscape and concerns about government-corporate overlap in AI development.

AgentWorkflow and Multi-Agent Solutions

LlamaIndex introduced AgentWorkflow, a new framework that builds on LlamaIndex Workflows to support multi-agent solutions. The framework offers expanded tool support and was met with community enthusiasm. Additionally, DeepSeek-R1, whose performance is described as comparable to OpenAI's o1, was highlighted as an integration within LlamaIndex. Users praised the ease of integration and expressed hopes of scaling it for real-world usage. A detailed guide gave step-by-step methods for building an open-source RAG system using LlamaIndex, Meta Llama 3, and TruLensML, comparing different approaches and providing performance insights. Community members also discussed parallel task calls in AgentWorkflow and proposed 'nesting workflows' as a way to enable parallel tasks in multi-agent pipelines.
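The 'nesting workflows' proposal can be illustrated with plain `asyncio`. This sketch deliberately does not use the LlamaIndex API; `research_step`, `inner_workflow`, and `outer_workflow` are hypothetical stand-ins showing only the general shape: an outer workflow starts several inner workflows and awaits them concurrently.

```python
import asyncio


async def research_step(topic: str) -> str:
    # Stand-in for an agent or tool call; a real step would await an LLM or API.
    await asyncio.sleep(0.01)
    return f"notes on {topic}"


async def inner_workflow(topic: str) -> str:
    # A small self-contained workflow: run one step, then summarize its result.
    notes = await research_step(topic)
    return f"summary({notes})"


async def outer_workflow(topics: list[str]) -> list[str]:
    # Launch one inner workflow per topic and wait for all of them together.
    # gather() preserves the order of its inputs in the returned list.
    return await asyncio.gather(*(inner_workflow(t) for t in topics))


def run(topics: list[str]) -> list[str]:
    return asyncio.run(outer_workflow(topics))
```

Calling `run(["pricing", "latency"])` runs both inner workflows concurrently and returns their summaries in input order.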

Cohere Discord

Discussions on the Cohere Discord include exploring the OpenAI endpoint with code examples, comparing text generation preferences such as 'Command-R7b' and 'Aya Expanse 32B,' and highlighting the benefits of the new 'Cohere Command R+ 08-2024' model. Enthusiasts suggested that Cohere release 'LCoT meme model weights' for comedic text generation. Additionally, users showcased attempts at image-to-video generation and expressed excitement about fueling creative momentum in visual generative workflows.

DeepSeek R1 Excels in Math Problems

The discussion in this section revolves around the performance of the R1 Distill Qwen 32B model, particularly in solving complex contest math problems. Users noted the model's superiority compared to other models like Llama 405B and DeepSeek V3 671B. Additionally, there is a mention of a user encountering difficulties loading the DeepSeek 32B model on a MacBook Pro M1 Max due to issues with the model vocabulary. Suggestions were made for accessing runtimes and updating llama.cpp to resolve this problem. The section also discusses inquiries regarding LMStudio settings on Apple Silicon, quantization settings' impact on model performance and memory usage, and the progress made by users in utilizing LMStudio for AI learning and specific query responses. There is excitement expressed about potential developments that could lead to even lighter AI models in the future.
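To make the link between quantization and memory usage concrete, here is a rough back-of-the-envelope sketch. It estimates weight storage only, assuming exactly 32 billion parameters and a uniform number of bits per weight; the function name is hypothetical, and the estimate ignores the KV cache, activations, and runtime overhead. Real quantization formats often store extra scale data, so their effective bits per weight can be higher than the nominal figure.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights alone, in GiB."""
    bytes_total = n_params * bits_per_weight / 8
    return bytes_total / 2**30


# Assumption: a 32B-parameter model stored at three nominal precisions.
for label, bits in [("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gib(32e9, bits):.1f} GiB")
# FP16: 59.6 GiB
# 8-bit: 29.8 GiB
# 4-bit: 14.9 GiB
```

Under these assumptions, halving the bits per weight halves the weight footprint, which is why lower-bit quantizations are the usual route to fitting a 32B model on a laptop.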

Interconnects (Nathan Lambert) ▷ #

A lively discussion in the Interconnects channel covers various topics related to AI research and developments. Members engage in debates about notable projects like the Stargate Project, Bespoke-Stratos-32B model, and the Flash Thinking Model. They also delve into concerns over AI data usage policies, alignment between human and AI representations, and the advantages of small models like FLAME. Community members share links to relevant articles and tweets providing additional insights and context to the ongoing discussions.

Interconnects: Discussions on AI, Job Displacement, and OpenAI vs Competitors

Users in the Yannic Kilcher channel discussed the performance of the R1 model, with some impressed by its ability to handle complex problems while others noted struggles with simpler tasks. DeepSeek was highlighted as a competitor to OpenAI, offering high-quality outputs at a lower cost, sparking speculation about disrupting the AI market. Ethical concerns arose regarding AI job displacement, with users pondering moral dilemmas around AI startups causing potential job losses. OpenAI's rivalry with Elon Musk and allegations of allegiance to America were discussed, along with implications of investments like the Stargate Project's $500 billion in AI infrastructure and concerns over corporate influences in government decisions.

Discussions on Various AI-related Topics

Discussions in this section cover various AI-related topics, including the performance of DeepSeek R1 compared to other models, challenges in recruiting reviewers for paper reviews, and concerns about censorship in AI models. Additionally, there are mentions of new AI projects such as IntellAgent for evaluating conversational agents, OpenAI's Stargate Project for AI infrastructure, and the launch of the Sonar and Sonar Pro API by Perplexity AI for generative search capabilities. Users also share insights on the upcoming Sonar Pro API deployment, model comparisons, and issues related to API performance and GDPR compliance in Europe.

Discussions on Implementing GPU Papers Collaboratively

Discussions in the GPU MODE > triton section covered TMA implementation crashes when using autotune, confusion regarding persistent matmul descriptors, TRITON_INTERPRET altering kernel execution behavior, and the impact of data dependencies on Triton kernels. Users also discussed how the order of operations affects results and called for collaboration on implementing challenging GPU-related papers. The conversation emphasized collaborative development as a way to tackle complex GPU algorithms.
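One common reason the order of operations changes numerical results is that floating-point addition is not associative. The summary does not say this was the cause in the Triton discussion, so the following is only a general illustration in plain Python rather than a Triton kernel:

```python
import math

values = [0.1, 0.2, 0.3]

# Same three numbers, grouped two different ways.
left_to_right = (values[0] + values[1]) + values[2]
right_to_left = values[0] + (values[1] + values[2])

# The results differ in the last bits, though they agree within tolerance.
print(left_to_right == right_to_left)              # False
print(math.isclose(left_to_right, right_to_left))  # True
```

Parallel reductions on a GPU may combine partial sums in a different order than a sequential loop, so comparing kernel output against a reference is usually done with a tolerance rather than exact equality.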

NVIDIA Blackwell Codegen and GPU Emulation Insights

The discussion in this section focused on the upcoming NVIDIA Blackwell Codegen for Blackwell B100/B200 and RTX 50, with uncertainties about sm_100a and sm_101a targets. There was curiosity about GPU emulation using LeetGPU.com on CPUs, generating interest in the method used. The Accel-Sim Framework was highlighted for simulating programmable accelerators. Users expressed surprise at the delay in the Blackwell whitepaper release and excitement for an upcoming talk on the Accel-Sim framework. The community showed anticipation for learning more about the new architecture's release details and explored STF experimentation, showcasing ongoing exploration of newer technologies in GPU development.

Use Cases and Developments in AI Community Discords

This section highlights various discussions and activities from different Discord channels within the AI community. Topics range from model performance comparisons to troubleshooting technical issues, and from the exploration of new AI functionalities to the challenges faced in different AI projects. Users across these channels engage in discussions about model optimization, AI applications in different fields like cybersecurity, and the challenges and successes encountered in using AI tools for various tasks. Link sharing is prevalent for further reference on models, performance metrics, and tools discussed in the conversations.

Cohere Discussions and Questions

This section covers discussions and questions from the Cohere Discord channel. It includes topics such as OpenAI API integration, text generation model recommendations, research assistance requests, Cohere's model release suggestion, explorations in image to video generation, and the sunsetting of a channel to streamline support processes. Additionally, there are discussions on issues with the Cohere Command R+ model, challenges in obtaining an API key, and calls for free unlimited access to ChatGPT. The section also highlights interest in DeepSeek R1 models, API key challenges, language barriers, and updates on GPT4All.

Footer and Social Networks

The footer section of the webpage includes links to find AI news on social networks such as Twitter and through newsletters. It also mentions that the content is brought to you by Buttondown, a platform for starting and growing newsletters.

FAQ

Q: What are some key advancements in AI discussed in Claude 3.5 Sonnet's Twitter recap?

A: Some key advancements in AI discussed include innovations in DeepSeek-R1 with reinforcement learning training, Gemini 2.0 Flash Thinking model by Google, comparisons of AI models like o1 and R1-Zero, AI applications like Windsurf and llama.cpp server, and AI integration with development tools like LlamaIndex with DeepSeek-R1.

Q: What themes are explored in Mistral 10V: Exploring New Capabilities with 12K Tokens?

A: Themes explored include the intense competition between the USA and China in AI, Mistral 10V's launch with 12,000 tokens, skepticism about China's robotics advancement and potential military applications, and discussions on AI limitations and user interactions.

Q: What is discussed about O1-Pro in the context of legislation analysis?

A: Discussions focus on the analysis of Trump's Executive Orders using O1-Pro, sparking debates on impacts, fact-checking, economic concerns, and highlighting 3blue1brown's video on the attention mechanism.

Q: What skepticism and discussions arise around Gemini 1.5 in the Reddit recap?

A: Skepticism is raised due to Elon Musk's doubts about SoftBank's funding, discussions on legality and economic implications, as well as debates on SoftBank's involvement in OpenAI's Stargate Project.

Q: What limitations and strengths of DeepSeek-R1 are highlighted?

A: DeepSeek-R1 is noted for performing well in specific scenarios with prompt optimization, but it struggles with creative or subjective tasks. It requires precise prompting to improve and is compared with other models such as DeepSeek V3, showing strengths and weaknesses in different task types.
