[AINews] TinyZero: Reproduce DeepSeek R1-Zero for $30 • Buttondown

Chapters

AI Twitter and Reddit Recap

DeepSeek-R1 Success and Community Excitement

Turkish Qwen 2.5 Tuning Hits 3X Speed Tradeoff

Discord Community Highlights

AI in Coding Discussions

Discussion on AI Models and Tools

Latent Space and AI General Discussion

AI Benchmarking and GPU Specs Discussion

Interconnects (Nathan Lambert) Retort Podcast 8 Messages

OpenAI & Nous Research AI Discussions

AI and Model Discussions

A Member's Introduction to the MAX Builds Page and Package Creators

AI News on Social Networks and Sponsors

AI Twitter and Reddit Recap

This section recaps AI discussions and updates from Twitter and Reddit. The Twitter recap covers model evaluations, DeepSeek-R1's performance, AI agents and applications, company news, technical challenges and their solutions, and research progress, along with some humor and critiques. The Reddit recap covers highlights from /r/LocalLlama.

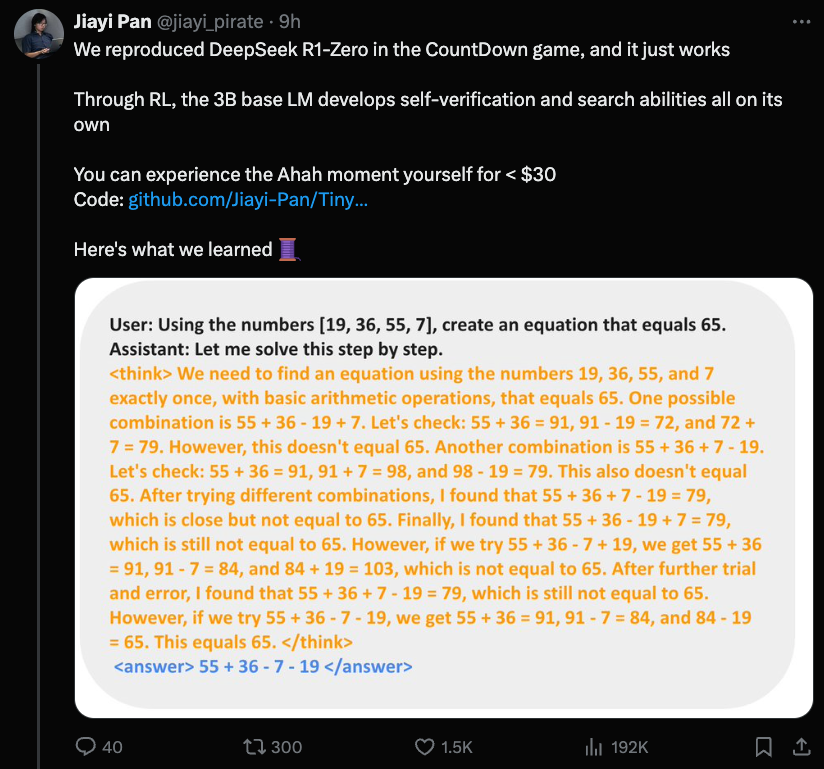

DeepSeek-R1 Success and Community Excitement


- Recognition on the LMSYS Arena Leaderboard: DeepSeek-R1's listing on the LMSYS Arena Leaderboard signals its standing in AI benchmarking, and the community welcomed its capabilities.

- MIT license: DeepSeek-R1 stands out as the only model on the leaderboard released under the MIT license, underscoring its open-source nature and flexibility.

- Doubts about the rankings: Some users questioned the leaderboard's rankings, suggesting they favor models such as GPT-4o and Claude 3.6 because those models were trained on human preference data.

- Open-source achievement: Users were impressed that an open-source model ranks this highly, and compared it with another open model, 405B.

- Comparison with OpenAI o1: DeepSeek-R1 rivals o1 in creative writing at a lower cost and stands out for less censored output. Discussion also covered censorship, open-source implications, and industry dynamics.

Benchmarking Sub-24GB AI Models

- Comprehensive Benchmark Analysis: An analysis of models that fit in 24GB of VRAM compares their performance across tasks, including Llama 3.3, Phi-4, and Mistral Nemo.

- Methodology and Tools: The benchmarks were run on an H100 with vLLM, and discussion covered the color-coding thresholds used to present the results.

- Community Requests and Contributions: Members asked for similar benchmarks of models that fit in 12GB and 8GB of VRAM, requested that the benchmarking code be shared for reproducibility, and discussed how quantization can cause models to underperform.
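Whether a model "fits in 24GB VRAM", and how much quantization helps, comes down to simple arithmetic: weight memory is roughly parameter count times bits per weight divided by 8. The sketch below is a rough estimate, not the benchmark author's method; the flat `overhead_gb` allowance for the KV cache, activations, and runtime is a hypothetical value, and real usage varies with context length and inference engine.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_vram(
    params_billion: float,
    bits_per_weight: float,
    vram_gb: float = 24.0,
    overhead_gb: float = 2.0,  # hypothetical allowance for KV cache, activations, runtime
) -> bool:
    """Rough check: weights plus a fixed overhead must not exceed the available VRAM."""
    return weight_memory_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


if __name__ == "__main__":
    # A hypothetical 14B-parameter model at several precisions.
    for bits in (16, 8, 4):
        gb = weight_memory_gb(14, bits)
        print(f"14B @ {bits}-bit: {gb:.1f} GB of weights, fits in 24 GB: {fits_in_vram(14, bits)}")
```

For this hypothetical 14B model the weights take about 28 GB at 16-bit (too large), 14 GB at 8-bit, and 7 GB at 4-bit, which is why quantization often decides what makes the 24GB cut, and also why quantized entries may score below their full-precision originals.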

Turkish Qwen 2.5 Tuning Hits 3X Speed Tradeoff

A user fine-tuned Qwen 2.5 with LoRA to improve its Turkish speech accuracy, citing grammar gains and Unsloth's continued pretraining docs. Training through Unsloth's integration in LLaMA-Factory was up to 3x slower, they reported, but they valued LLaMA-Factory's UI: a tradeoff between speed and convenience.
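For readers unfamiliar with LoRA: instead of updating a full weight matrix W, it freezes W and trains two small matrices, A (r × d_in) and B (d_out × r), adding (alpha / r) · B A x to the layer's output. The plain-Python sketch below illustrates that update and its parameter savings; it is not Unsloth's or LLaMA-Factory's implementation, and the matrix sizes and rank are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """Compute h = W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) are trained.
    """
    r = len(A)  # LoRA rank = number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds for one d_out x d_in weight matrix."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 identity weight
    A = [[1.0, 1.0]]              # rank-1 down-projection
    B_init = [[0.0], [0.0]]       # B starts at zero, so the adapter is initially a no-op
    x = [2.0, 3.0]
    print(lora_forward(W, A, B_init, x, alpha=1.0))  # [2.0, 3.0]
    B = [[1.0], [0.0]]            # B after some (hypothetical) training
    print(lora_forward(W, A, B, x, alpha=1.0))       # [7.0, 3.0]
    # Hypothetical 4096 x 4096 projection at rank 16: adapter vs. full matrix size.
    print(lora_trainable_params(4096, 4096, 16), 4096 * 4096)
```

At rank 16 the adapter for a 4096 × 4096 projection has 131,072 trainable parameters versus 16,777,216 in the full matrix, which is what makes LoRA fine-tuning feasible on modest hardware.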

Discord Community Highlights

Discussions across the Discord communities span a wide range of topics, from updates to AI assistants such as Perplexity to debates over models such as Gemini and ChatGPT. Users shared insights on AI-developed drugs and on integrating different tools and APIs, and discussed hardware such as NVIDIA's RTX 5090 and the challenges of running demanding models. The Discord servers of LM Studio, aider (Paul Gauthier), Interconnects, and GPU MODE added discussion of new features, debates, and user experiences, ranging from technical details to industry trends.

AI in Coding Discussions

Community members in the Cursor IDE channel discussed a range of topics related to AI in coding:

- DeepSeek R1's benchmark results and lower cost.

- Mixed user experiences and reliability issues with the Cursor platform.

- AI as a coding assistant that still needs human oversight to maintain code quality.

- The latest Cursor release, version 0.45.2, and its features.

- Industry shifts driven by open-source models such as DeepSeek R1.

Discussion on AI Models and Tools

This section covers Unsloth AI discussions on language accuracy, integration performance, and continued pretraining, along with the advantages of posit computing and the Evo model's success at nucleotide prediction. In the Codeium (Windsurf) channels, users discussed new features such as web search and the launch of a demo video, as well as account registration problems and other issues; Windsurf 1.2.2 was also released with enhanced features. Finally, OpenRouter announced updates on DeepSeek R1 message patterns and temporarily deranked the DeepSeek provider after an outage.

Latent Space and AI General Discussion

This section covers recent AI developments discussed in the Latent Space community and in general AI channels. Members were enthusiastic about the Model Context Protocol (MCP) and its potential for connecting AI models to external tools and data, and shared resources and upcoming AI events. Others voiced frustration with Perplexity AI, compared it with models such as ChatGPT, and explored alternatives that might offer a better user experience.

AI Benchmarking and GPU Specs Discussion

AI benchmarking reveals VRAM limitations:

- Members raised concerns about how well smaller models use memory bandwidth, particularly on older hardware such as the GTX 1080 Ti and CPUs without AVX2 support (AVX2 is a CPU instruction set, not a GPU feature).

- Which benchmarks are meaningful depends heavily on the GPU's VRAM capacity and memory bandwidth.

GPU and memory specs critical for AI tasks:

- Members discussed how much memory speed and type matter when running AI models on older hardware.

- They also noted the challenges of pairing high-performance servers with outdated NVIDIA cards such as the P40.
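The bandwidth concerns above follow from a common back-of-the-envelope estimate: peak bandwidth is bus width times per-pin data rate divided by 8, and single-stream token generation is usually memory-bound, because each new token reads every weight once, so tokens per second cannot exceed bandwidth divided by weight size. The sketch below uses a 352-bit bus at 11 Gb/s per pin (the commonly cited GTX 1080 Ti figures; treat them as an assumption and check your card's spec sheet) and ignores KV-cache traffic, compute limits, and batching, so its result is a ceiling rather than a prediction.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin data rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


def decode_ceiling_tokens_per_s(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound for memory-bound, batch-size-1 decoding of a dense model.

    Each new token must stream every weight from VRAM once, so throughput
    cannot exceed bandwidth / weight size. KV-cache reads and compute are ignored.
    """
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    bw = peak_bandwidth_gb_s(352, 11.0)  # assumed inputs: 352-bit bus, 11 Gb/s per pin
    print(f"peak bandwidth: {bw:.0f} GB/s")  # peak bandwidth: 484 GB/s
    for weights in (4.0, 8.0, 16.0):  # hypothetical weight sizes in GB
        ceiling = decode_ceiling_tokens_per_s(bw, weights)
        print(f"{weights:.0f} GB of weights -> at most {ceiling:.1f} tokens/s")
```

Because the ceiling scales with bandwidth, slower or older memory lowers it directly, and shrinking the weights through quantization raises it roughly in proportion.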

Challenges with current LLM implementations:

- Members expressed frustration that consumer hardware is insufficient for demanding LLM workloads.

- Some acknowledged that corporate AI inference APIs may be the better option, since large providers can afford to subsidize costs.

Interconnects (Nathan Lambert) Retort Podcast 8 Messages


- Adobe Podcast 'Enhance Speech' is a mixed bag: Users found that the tool can sound robotic, especially with multiple speakers, which highlights the need for good microphone setups.

  - It works well for solo recordings, but problems appear in group settings.

- Multi-person audio is costly and complex: Good audio for group interviews requires quality equipment such as the Rode Podmic and Rodecaster, which is expensive and labor-intensive to set up.

  - Studio-like quality comes at the cost of a complex setup.

- Avoiding 'magic audio' tools: Members preferred not to rely on 'magic audio' processing that compromises how authentic the recording sounds.

  - Well-captured audio is valued over heavily processed sound.

- Keeping audio effort low with one-on-one interviews: Focusing on one-on-one interviews reduces production effort without sacrificing sound quality.

OpenAI & Nous Research AI Discussions

This section covers discussions and announcements from the OpenAI community, including Canvas integration, HTML and React code rendering, and the ChatGPT desktop app rollout, as well as AI in game development, concerns about AI's internet access, and features of the Operator tool. In the Nous Research AI channel, members discussed advances in AI reasoning models, the importance of self-attention, and the potential of DisTrO for distributed GPU training. Discussions in the Yannic Kilcher community covered memory bus width, math questions for language models, and the difficulties LLMs face in visual reasoning tasks.

AI and Model Discussions

This section covers a mix of AI topics: a humorous reference to an 'AI gamer waifu' and AI's growing presence in entertainment and gaming; AI agents, task management, generative output, and AGI themes; and AI animation workflows and model compatibility. GPT4All v3.7.0 was released with Windows ARM support, macOS bug fixes, code interpreter improvements, and chat templating upgrades. Other threads covered the challenges US export regulations pose for AI models, compliance concerns involving Oracle Japan and Cohere, the market impact of GPU restrictions, Cohere's operations from Canada, and the operating costs of NVIDIA's Blackwell GPUs. Finally, members discussed creating forum posts and asynchronous programming in Mojo.

A Member's Introduction to the MAX Builds Page and Package Creators

- The MAX Builds page has been launched, featuring a dedicated section for community-built packages, encouraging more community involvement and contributions.

- Special recognition is given to inaugural package creators, setting the stage for future contributions and package sharing.

- Instructions are provided on how to feature a project on the MAX Builds page by submitting a PR to the Modular community repo with a recipe.yaml file, promoting an easy pathway for community engagement.

AI News on Social Networks and Sponsors

AI News is available on Twitter and through its newsletter, and is brought to you by Buttondown, a platform for starting and growing your own newsletter.

FAQ

Q: What is DeepSeek-R1 and why is it significant?

A: DeepSeek-R1 is an AI model listed on the LMSYS Arena Leaderboard, where its strong showing has drawn attention in AI benchmarking. It stands out as the only model on the leaderboard with an MIT license, underscoring its open-source nature and flexibility.

Q: How does DeepSeek-R1 compare to OpenAI's o1?

A: DeepSeek-R1 rivals OpenAI's o1 in creative writing at a lower cost and stands out for less censored output. Discussions also cover its impact, open-source implications, and industry dynamics.

Q: What are the benchmarking challenges discussed in the AI community?

A: The AI community discussed VRAM limitations and how well smaller models use memory bandwidth, the importance of memory speed for AI tasks, and the difficulty of running large language models (LLMs) on consumer hardware.

Q: What are the discussions surrounding AI technologies and developments?

A: Discussions include excitement about the Model Context Protocol (MCP), frustrations with Perplexity AI, comparisons with ChatGPT, advances in AI reasoning models, and the potential of DisTrO for distributed GPU training.

Q: How do users feel about Adobe Podcast's 'Enhance Speech' tool?

A: Users found that 'Enhance Speech' can sound robotic, especially in group settings, which makes quality microphone setups important. Equipment such as the Rode Podmic and Rodecaster can deliver studio-like audio for group interviews, but it is expensive and labor-intensive to set up.
